By default, Windows is set to wait 20 seconds for a service to gracefully shut down before it is terminated during a host system shutdown. While in most cases this isn't a big deal, it is a bit of an issue when you are running Virtual Server or Hyper-V and need to wait for all the VMs to enter a saved state before the host shuts down.

Typically, when a Virtual Server or Hyper-V host OS begins shutting down, it sends the Stop command to the Virtual Server or Hyper-V service, which then attempts to place all guest VMs into a saved state. This essentially freezes whatever each VM is doing at that moment and writes its memory to disk so it can be resumed quickly when the host comes back up (think hibernation). Until this completes, the Virtual Server or Hyper-V service will not enter the Stopped state. However, depending on how much memory each guest VM has allocated and how many guest VMs are being saved at once and competing for disk I/O, this process may (and probably will) take more than 20 seconds. What happens if the guest VMs can't be saved in time? Think of it as "pulling the plug" on the guest VM: it is shut off ungracefully and the contents of its memory are lost, much like pulling the power cord out of the back of a running server.
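A quick back-of-the-envelope calculation shows why 20 seconds is rarely enough: every VM's memory has to be written to disk, so the save time is at least the total guest memory divided by the disk throughput the VMs share. The Python sketch below assumes a hypothetical host with four 4 GB guests and a disk subsystem sustaining about 100 MB/s; both figures are illustrative, and real saves add overhead, so treat the result as a lower bound.

```python
def estimate_save_seconds(vm_memory_mb, disk_throughput_mb_per_s):
    """Rough lower bound on the time needed to save all guest VMs.

    Assumes each VM's memory is written to disk once and that all VMs
    share a single (hypothetical) sustained disk throughput figure.
    """
    if disk_throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    total_mb = sum(vm_memory_mb)
    return total_mb / disk_throughput_mb_per_s


# Hypothetical host: four guest VMs with 4 GB (4096 MB) each, ~100 MB/s disk.
vms = [4096, 4096, 4096, 4096]
seconds = estimate_save_seconds(vms, 100)
print(f"~{seconds:.0f} s to save, vs. the 20 s default timeout")  # ~164 s
```

Even with these modest assumed numbers, the save takes roughly eight times the default timeout, which is why some headroom beyond the estimate is worth building in.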

Thankfully, there is a way to lengthen the amount of time Windows will wait for services to enter the Stopped state before ending them ungracefully. Open trusty RegEdit and navigate to HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control, where you will find the string value WaitToKillServiceTimeout set to a default of 20000 (milliseconds). Double-click WaitToKillServiceTimeout, change the value to a larger number, such as 180000 (3 minutes), click OK, and close RegEdit. This tells Windows to wait up to 3 minutes for services to enter the Stopped state before ending them ungracefully, which should give your guest VMs plenty of time to enter the Saved state during a host system shutdown.
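If you need to make this change on several hosts, scripting it may be easier than clicking through RegEdit. Here is a minimal Python sketch, assuming Python is installed on the host and the script is run from an elevated prompt. The helper names (`timeout_ms`, `reg_file_text`, `apply_timeout`) and the `--apply` switch are hypothetical, not part of Windows. The script builds the text of a .reg file you could import, and on Windows it can optionally write the value directly through the standard-library `winreg` module.

```python
import sys

# Registry location and value name described above.
KEY_PATH = r"SYSTEM\CurrentControlSet\Control"
VALUE_NAME = "WaitToKillServiceTimeout"


def timeout_ms(minutes):
    """Convert minutes to the millisecond string Windows stores."""
    if minutes <= 0:
        raise ValueError("timeout must be positive")
    return str(int(minutes * 60 * 1000))


def reg_file_text(minutes):
    """Build the contents of a .reg file that sets the timeout."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[HKEY_LOCAL_MACHINE\\{KEY_PATH}]",
        # The value is a string (REG_SZ), so it is written in quotes.
        f'"{VALUE_NAME}"="{timeout_ms(minutes)}"',
        "",
    ]
    return "\n".join(lines)


def apply_timeout(minutes):
    """Write the value directly (Windows only, needs administrator rights)."""
    import winreg  # standard library, but only available on Windows

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, KEY_PATH, 0,
                        winreg.KEY_SET_VALUE) as key:
        # Stored as REG_SZ, not as a DWORD.
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_SZ, timeout_ms(minutes))


if __name__ == "__main__":
    print(reg_file_text(3))
    if sys.platform == "win32" and "--apply" in sys.argv:
        apply_timeout(3)
```

To produce an importable file instead of applying the change directly, something like `open("timeout.reg", "w", encoding="utf-16").write(reg_file_text(3))` should work (the file name is just an example). Regedit's own exports use UTF-16, and Windows text mode turns the `\n` separators into CRLF line endings.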

NOTE: This setting affects ALL services. So, if the Virtual Server or Hyper-V services have finished stopping but another service is hanging, Windows will wait up to the time you specified before killing that service. Keep this in mind if you wonder why Windows isn’t shutting down even though the Virtual Server or Hyper-V services have all stopped.

Mike Laurencelle

I'm a SharePoint & Server Systems Administrator for Sears Home Improvement Products, headquartered in sunny Longwood, Florida. My primary functions revolve around SharePoint and Virtualization technologies.

I've been in the IT industry for about 18 years. For me, IT is more than a job to make a living, more than a career to call my own. It's my passion. I am a self-proclaimed geek and am interested in all things technology. I can't imagine being in any other field - I absolutely love what I do.

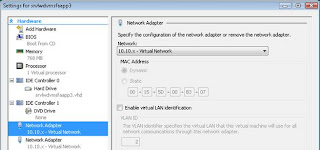

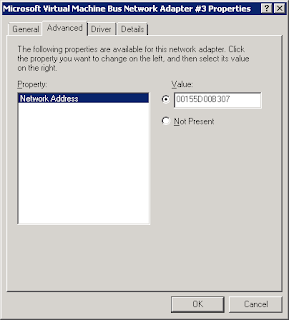

I was trying to create my first Hyper-V VM based NLB cluster and found it is somewhat different from doing the same on either hardware or with Virtual Server 2005. So, I thought I’d share my experience and what worked for me in the hopes that it will help someone else avoid the issues I faced.

We’ve recently been running into a limitation in our virtualization infrastructure, and I found a solution for it in a Microsoft Hyper-V feature: VLAN tagging.

For our main virtualization infrastructure, we are using HP C-class blades with 4 NICs, each connected to a different VLAN. These servers are set up as a cluster, so guest VMs can only be placed on the handful of VLANs the cluster is connected to. This has significantly limited our ability to virtualize more of our servers.

Enter Hyper-V, with the ability to do VLAN tagging at the VM level. Now I can trunk the four connections together to provide redundancy and additional throughput for my bandwidth-hungry VMs, carry multiple VLANs over them, and have each VM tag its traffic with the VLAN it belongs to. It sounds simple, but a lot of planning and configuration goes into this, especially in a clustered and complex environment such as ours.
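To make "tagging" concrete: on the wire, an IEEE 802.1Q VLAN tag is four extra bytes inserted after the source MAC address. These bytes hold the identifier 0x8100 followed by a 3-bit priority, a 1-bit drop-eligible flag, and a 12-bit VLAN ID. The Python sketch below only illustrates that frame format. It is not how Hyper-V is configured (there you set the VLAN ID in the VM's network adapter settings), and the function names are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value marking an 802.1Q-tagged frame


def vlan_tag(vlan_id, priority=0, dei=0):
    """Return the 4-byte 802.1Q tag for the given VLAN ID and priority."""
    if not 1 <= vlan_id <= 4094:  # 0 and 4095 are reserved
        raise ValueError("VLAN ID must be 1-4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority (PCP) must be 0-7")
    # Tag Control Information: PCP (3 bits) | DEI (1 bit) | VID (12 bits)
    tci = (priority << 13) | ((dei & 1) << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def tag_frame(frame, vlan_id, priority=0):
    """Insert a tag into an untagged Ethernet frame after the two MAC addresses."""
    if len(frame) < 14:
        raise ValueError("frame too short for an Ethernet header")
    return frame[:12] + vlan_tag(vlan_id, priority) + frame[12:]


# Hypothetical untagged frame: zeroed MACs, EtherType 0x0800 (IPv4), dummy payload.
frame = bytes(12) + b"\x08\x00" + b"payload"
tagged = tag_frame(frame, 20)
print(tagged[12:16].hex())  # 81000014
```

A switch port trunked to the host carries frames like `tagged` for every VLAN. Each frame's VLAN ID is what keeps VMs on different VLANs separated even though they share the same physical NICs.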

I will publish a follow-up with step-by-step instructions for a more basic setup, but the fundamentals will apply to more complex scenarios as well.
